2023 Elections: Deepfake And A New Way Of Committing Old Crimes

By Testimony Akinkunmi

ABSTRACT

Deepfakes are a form of synthetic media produced by technologies such as face swapping, which enable the quick creation of fake images or videos that appear incredibly realistic. Deepfakes leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content with a high potential to deceive. Because of this capacity to deceive, lawmakers may be tempted to overlook the technology's ground-breaking advantages and instead create restrictive laws to govern its use. This article argues that, since societal institutions have dealt, and continue to deal, with other misused inventions (the camera, the internet and social media), any regulation put in place should not be so heavy-handed that it clogs the technology's development, especially in relation to elections.

A. INTRODUCTION

Nigeria’s misunderstanding of technological advancement has been a blot on its record since independence. In Africa Rise and Shine, Jim Ovia, founder of Zenith Bank, writes: ‘unfortunately, the Nigerian military government did not agree…they thought our dish was being used to spy on the country…it was pulled down and transmission stopped.’ This denied Nigeria, for a significant period, the golden opportunity of being the first country in Africa to have the internet.

The reluctance shown by the military government resurfaced in a different form in our democracy in recent years, under the Muhammadu Buhari administration, when a hate speech bill was introduced to regulate what could be said on the internet. A legal practitioner noted that ‘the wordings of the Bill are very broad and contain provisions which appear to generalize every insulting or abusive word on a particular ethnic group as hate speech. There is a thin line between hate speech and offensive speech, since not all forms of offensive speech can be categorized as hate speech.’ In addition, the first draft of the bill prescribed death by hanging as a punishment, a provision that was thankfully removed after public outcry.

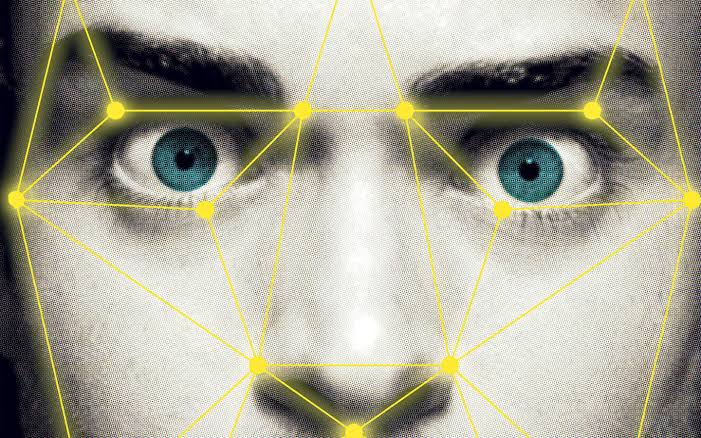

A deepfake is video or audio content that has been manipulated using artificial intelligence to make it appear that a person is doing or saying something they never did or said. For example, face replacement, or “face swapping”, involves stitching one person’s face over another’s, while speech synthesis involves modelling someone’s voice so that it can be used in a video to make that person appear to say something they did not.

Whilst deepfakes have been used to great effect in the film and advertising industries, for example to improve CGI, there has also been growing misuse of the technology. To date, the most prevalent use of deepfakes is face-replacement pornography, in which the likeness of an individual, most often a celebrity, is superimposed on a pornographic performer’s body.

Deepfakes are also increasingly used to create fake videos of politicians, which could be extremely disruptive on a very large scale, for example by distorting elections or manipulating public opinion. Although the technology is not yet sophisticated enough to make deepfakes undetectable, it is constantly improving.

B. BENEFITS

I. EDUCATION

Nigerian students repeatedly complain that the country’s educational system is ineffective and irrelevant to them after graduation. Deepfake synthetic media can bring an interactive edge to the educational landscape. As a saying often attributed to Benjamin Franklin puts it, “Tell me and I forget, teach me and I may remember, involve me and I learn.” Deepfakes create an opportunity for interactive learning, and when blended with virtual reality, they could help Nigerian students compete with their peers in other parts of the world.

Researchers at Samsung’s AI Center and the Skolkovo Institute of Science and Technology have demonstrated Deepfake technology that brings still images, such as the Mona Lisa and photographs of Albert Einstein, to life. Imagine, too, a visual representation of Newton watching the apple fall and reasoning through what he saw; such a synthetic re-enactment would have a greater impact on students’ minds.

II. HEALTH

In a world where serious and terminal illnesses such as brain tumours and cancer are common, it is only right to use better means to fight them. Nvidia, the Mayo Clinic and the MGH & BWH Center for Clinical Data Science collaborated on using generative adversarial networks to create ‘fake’ brain MRI scans. The researchers found that algorithms trained on these synthetic images together with only 10% real images became just as good at spotting tumours as an algorithm trained only on real images. This could make it faster and more efficient to detect such growths and treat them where possible.

III. PERSONALIZATION

In commercial transactions, there is often a worry that we will not make the right choice. Deepfake technology, however, lets a customer create an avatar that looks like them and use it to try on the latest fashion trends. The Deepfake approach even allows brands to create a virtual fitting room where users can try out products before purchasing them. Retailers can also engage customers at home by creating a mixed-reality world powered by AI, allowing them to try out furniture and decorate their space virtually.

In gaming, avatars can be the key to offering more intense and satisfying experiences. They can increase the feeling of being transported to another world, provide an enhanced sense of agency, and satisfy the need to feel connected to others. In the process, they make games more fun and engaging, and players more loyal.

IV. CREATIVITY

Deepfakes also have significant positive uses for creative, commercial and social purposes. For instance, CereProc is a company that uses deepfake technology to create voices for people who have lost theirs to disease. Similarly, generative adversarial network (GAN) technology could bring significant cost and time savings in producing videos. Video production today is highly unscalable: it is a physical process involving many cameras, many studios and many actors.

With GAN technologies, synthetic videos can be produced at about ten per cent of the usual cost.

A notable illustration is a video of David Beckham, recorded in English, to raise awareness of the Malaria Must Die initiative. Beckham’s face was deepfaked so that he appears to “speak” in nine languages.

C. PROBLEMS

I. SCAM

Deepfakes lend themselves particularly well to scams, where the goal is to obtain money or access to sensitive company data. Large corporations, and increasingly medium-sized companies too, are being targeted by cybercriminals. They use manipulated audio recordings, for example, to obtain money by fraud: with the fake recordings, they convincingly imitate the voices of executives and instruct employees to transfer money to fraudulent bank accounts. These methods are also called CEO fraud or voice spoofing.

So-called “read fakes”, i.e. forged texts, are increasingly being used in such fraudulent schemes as well. New technologies are used to imitate the writing style and wording of CEOs. The result may be, for example, phishing emails instructing employees to follow fraudulent links, disclose passwords or send sensitive data. In the context of corporate fraud, Deepfakes thus represent the next stage of social engineering, in which employees are cleverly manipulated into unwittingly becoming accomplices of fraudsters.

Scammers can also use Deepfakes to circumvent camera-based authentication mechanisms, such as identity verification checks and retina scanners. This raises the concern of whether Deepfake actors could ever be caught in the act if they can effortlessly clear the security bar currently in place.

II. PORNOGRAPHY

Deepfake pornography prominently surfaced on the Internet in 2017, particularly on Reddit. A report published in October 2019 by Dutch cyber security start-up Deeptrace estimated that 96% of all Deepfakes online were pornographic.

If Deepfake pornography is allowed to continue without relevant laws in place, there is a high risk of abuse, loss of privacy and even mental distress for its victims.

III. POLITICS

In politics, the ability to make it look as if a political ally or opponent said or did something they never did has obvious advantages. Obvious applications come to mind, such as making it appear that a political opponent said something socially unacceptable, is suffering from a disability, or is under the influence of an intoxicant.

In Gabon, during the video broadcast of his traditional 2019 New Year presidential address, President Ali Bongo was reported to look “off”. This triggered suspicions in some quarters that the broadcast was an AI-generated Deepfake of the President. The uncertainty this created, in the context of the President’s illness, led to an aborted coup attempt and internet disruption in the Central African country. There was no clear-cut evidence that this particular video was a Deepfake, but the very existence of the technology within Gabon’s fragile political context at the time created uncertainty.

Given the high levels of illiteracy on the continent, it is not far-fetched to imagine how a well-coordinated AI Deepfake campaign could stoke social unrest around elections, particularly in highly contested contexts like Gabon. Imagine a Deepfake video showing a prominent leader making inflammatory comments towards another ethnic or religious group, or showing election commissioners reading fake election results, which then goes viral and is widely accepted as genuine before being debunked. Africa has a long and unfortunate history of violence and carnage around elections, and scenarios such as these could well be tinder for the fire.

The 2023 elections in Nigeria will be a testing ground for how Nigerians react when bombarded with a truckload of synthetic, almost believable news. However, given Deepfake’s ability to sway the public easily, especially at election time, there will be a temptation to introduce unnecessary new laws that, as recent history has repeatedly shown, do more harm than good to a nation’s technological development.

D. WHERE WE ARE LEGALLY

In the USA, Rep. Yvette Clarke (D-NY) introduced a bill that would impose an unnecessary burden on those creating First Amendment-protected media while doing little to deter motivated bad actors. Under Rep. Clarke’s bill, those seeking to make satirical Deepfake content would have to label such content, so numerous online video creators would be burdened with a requirement that applies to lawful material. Perhaps more concerning, the costs of this burden are unlikely to be outweighed by its benefits. Watermarks and other labels attached to videos are relatively easy to remove, so those motivated to interfere with elections via Deepfake content would not find it difficult to circumvent the labelling requirement. Indeed, removing watermarks and identifying metadata can be automated. In addition, the presence of watermarks on media imposes a cost on content creators and their audiences. As noted above, Deepfakes can be used to create valuable creative products, and part of the appeal of such products is the degree of realism and escapism the technology can provide. Watermark requirements or other labelling mandates would inevitably degrade that realism, a significant cost that would come with little benefit.

Rep. Clarke’s bill is an example of the kind of Deepfake bill Nigerian lawmakers should seek to avoid. It is too broad, would impose a burden on those creating First Amendment-protected content, and would do little to mitigate the spread of damaging Deepfake content.

In 2019, California prohibited the use of deepfakes in election materials by specifically forbidding the malicious production or distribution of “materially deceptive” campaign materials within sixty days of an election. Under the law, which was to remain in effect until 2023, doctored images are considered deceptive if a reasonable person would have a fundamentally different understanding or impression of the content than that person would have of the original, unaltered image.

There is a high rate of ignorance of the concept of Deepfake despite widespread exposure to its uses. Even the author of this article only learnt the term ‘Deepfake’ on 5 December 2021, despite having seen viral Deepfakes of different forms, including one of Mark Zuckerberg, Meta’s founder. A survey carried out by deepfake detection firm Sensity found that only 72% of Americans knew what a deepfake really is. If this statistic is accurate, there would be a very heavy clog on the wheel of justice if and when cases relating to Deepfakes arise.

Renowned legal practitioner Augustine Eigbedion has highlighted certain existing Nigerian laws that may apply to Deepfakes.

Nigeria’s data protection and privacy regulatory framework stems from the fundamental right to privacy under Section 37 of the Constitution of the Federal Republic of Nigeria 1999 (as amended). A breach of an individual’s privacy may generally take one of the following forms: intrusion into private life (with regard to how information was obtained); publicity given to private life; publicity placing a person in a false light; and wrongful appropriation. Accordingly, a victim of a Deepfake may bring an action for breach of the constitutional right to privacy, provided they can persuade the court to construe that right as covering intrusion into one’s private life, particularly with respect to how photographs or videos are obtained and subsequently deepfaked.

The Cybercrimes (Prohibition, Prevention, etc.) Act, 2015 remains at the forefront of tackling criminal activities relating to cyberspace. Section 24 of the Cybercrimes Act criminalizes the intentional dissemination, by means of computer systems or networks, of a message or other matter that is grossly offensive, pornographic or of an indecent, obscene or menacing character, or that the sender knows to be false, for the purpose of causing annoyance, inconvenience, danger, obstruction, insult, injury, criminal intimidation, enmity, hatred, ill will or needless anxiety to another. Hence, posting Deepfakes to target individuals, for example by sharing non-consensual pornographic Deepfakes, would violate Section 24.

Similarly, Sections 13, 14 and 22 of the Cybercrimes Act, read together, criminalize impersonation-related activities (computer-related forgery, computer-related fraud and identity theft). This applies particularly where a person knowingly accesses a computer or network and inputs, alters, deletes or suppresses data, resulting in inauthentic data, with the intention that it will be considered or acted upon as if it were authentic, or where such actions cause loss of property to another, whether or not for the purpose of conferring an economic benefit on himself or another person. The offences also cover instances where a person sends an electronic message that materially misrepresents any fact with intent to defraud another. It follows that where a Deepfake video is created and circulated for the purpose of causing “annoyance, inconvenience danger, obstruction, insult, injury, criminal intimidation, enmity, hatred, ill will or needless anxiety” to another, or where such a video is used to perpetrate fraud, the relevant provisions of the Cybercrimes Act may be applied to penalize such actions.

To address harms of a broader nature affecting society at large, Section 26 of the Cybercrimes Act criminalizes the creation or distribution of racist or xenophobic material to the public through a computer system or network. Thus, a Deepfake containing racist content or hate speech that is circulated to incite an audience to violence would be captured by this provision.

E. WHERE WE CAN BE

Our existing legislation will need to be repealed and/or amended to cure latent ambiguities that may arise regarding the applicability of those rules to Deepfakes and other emerging technologies. This cannot be overemphasized, as the rate at which the law is clarified or amended to overcome such hurdles is, in effect, its pace of ‘adaptation’. This process will necessarily involve flexible and inclusive measures that bring innovators, startups, established companies, regulators, experts and the public into the law-making and review process.

Legislation alone will not be enough to bridge the gap, because technological advancement and its regulation rarely move at the same pace. Individuals and platforms also need to contribute to the development of the legal landscape for Deepfakes. Market-driven solutions would be most desirable. Companies running content dissemination platforms have the technical expertise and resources to develop deepfake detection technologies, and some deepfake technology companies have already indicated that they are aware of the ethical duties associated with their businesses. From a technology point of view, however, it may be argued that deepfake detection technologies could lead to an “arms race” in which the technology used to generate deepfakes becomes as sophisticated as the technology used to detect them.

Journalistic and fact-checking outlets such as Animal Politico, Code for Africa, Rappler and Agence France-Presse have also used Assembler, a media manipulation detection tool built by Jigsaw, a Google incubator. Journalists and academics have collaborated in efforts to address the spread of Deepfakes, with Duke University’s Reporters’ Lab, the News Integrity Initiative at CUNY’s Newmark School of Journalism and Harvard’s Nieman Lab among the academic institutions seeking to help journalists tackle Deepfake material.

Public enlightenment plays a pivotal role in every industry, and especially so with Deepfakes. Citizens should understand that Deepfake technology is not illegal in itself; how it is used determines whether it is lawful. Detection tools, ingenuity and fact-checking should therefore always be at hand when encountering inflammatory or suspicious content online. Doing this consistently will shrink the fertile ground for disinformation and the disasters it can inflame.

F. CONCLUSION

Deepfake is a new way of committing old crimes, and the law therefore needs to be clearer about what is legal and illegal when it comes to deepfakes. The questions range from commercial concerns, such as who would own the rights to a deepfake video of a deceased celebrity, to cybercrime concerns arising from the anonymity the technology affords, and even the political question of whom to hold responsible for a deepfake: the known platform or the anonymous creator. The law must not take a backseat at a time like this.

The 2023 elections are near. A fake announcement of election results by a deepfaked INEC Chairman is possible; so is a fake news conference announcing the death of an important government official. Almost anything is possible. We need to combine checks and balances that inhibit or prevent inappropriate use of technology with the right infrastructure and connections between different experts, to ensure we develop technology that helps society thrive.

AUTHOR’S BRIEF

Testimony Orinayo AKINKUNMI

Testimony Akinkunmi is a third-year law student at the University of Abuja. He is interested in writing about fate, innovation and marketing. He plays chess and is a recognised literary critic. He has also written about space law and arbitration. He can be contacted at testimony.akinkunmi@gmail.com or +2349036642392.

****************************************************************************************

This work is published under the free legal awareness project of Sabi Law Foundation (www.SabiLaw.org) funded by the law firm of Bezaleel Chambers International (www.BezaleelChambers.com). The writer was not paid or charged any publishing fee. You too can support the legal awareness projects and programs of Sabi Law Foundation by donating to us. Donate here and get our unique appreciation certificate or memento.

DISCLAIMER:

This publication is not a piece of legal advice. The opinion expressed in this publication is that of the author(s) and not necessarily the opinion of our organisation, staff and partners.

PROJECTS:

Take short courses, get samples/precedents and learn your rights at www.SabiLaw.org

Publish your legal articles for FREE by sending to: eve@sabilaw.org

Receive our free Daily Law Tips & other publications via our website and social media accounts or join our free WhatsApp group: Daily Law Tips Group 6

KEEP IN TOUCH:

Get updates on all the free legal awareness projects of Sabi Law (#SabiLaw) and its partners, via:

YouTube: SabiLaw

Twitter: @Sabi_Law

Facebook page: SabiLaw

Instagram: @SabiLaw.org_

WhatsApp Group: Free Daily Law Tips Group 6

Telegram Group: Free Daily Law Tips Group

Facebook group: SabiLaw

Email: lisa@sabilaw.org

Website: www.SabiLaw.org

ABOUT US & OUR PARTNERS:

This publication is the initiative of the Sabi Law Foundation (www.SabiLaw.org) funded by the law firm of Bezaleel Chambers International (www.BezaleelChambers.com). Sabi Law Foundation is a Not-For-Profit and Non-Governmental Legal Awareness Organization based in Nigeria. It is the first of its kind and has been promoting free legal awareness since 2010.

DONATION & SPONSORSHIP:

As a registered not-for-profit and non-governmental organisation, Sabi Law Foundation relies on donations and sponsorships to promote free legal awareness across Nigeria and the world. With a vast followership across the globe, your donations will assist us to increase legal awareness, improve access to justice, reduce common legal disputes and crimes in Nigeria. Make your donations to us here or contact us for sponsorship and partnership, via: lisa@SabiLaw.org or +234 903 913 1200.

*********************************************************************************